Part of brain that understands social interactions located

A research team recently identified the part of the brain that understands social interactions. They found this region is sensitive not only to the presence of interactive behaviour, but also to the contents of interactions.

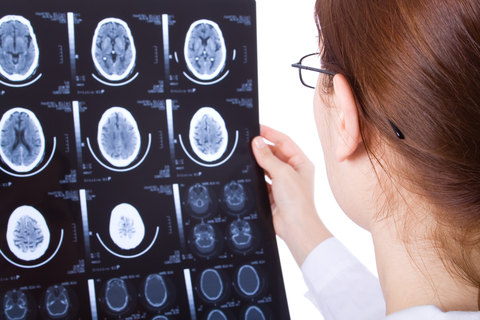

In a study led by Dr Kami Koldewyn, of the Developmental Social Vision Lab at Bangor University, researchers used functional magnetic resonance imaging (fMRI) to measure the responses of participants to brief video clips. These depicted two human figures either interacting or not interacting with each other.

Writing in The Conversation, Jon Walbrin, a PhD researcher in cognitive neuroscience at Bangor University, says a single brain region, the right side of the posterior superior temporal sulcus (pSTS), was the only part of the brain that could differentiate between interactive and non-interactive videos.

This isn’t the first time that the pSTS has been linked with processing social information. The pSTS and broader temporal lobe region are known to be sensitive to other categories of social visual information. This includes faces and bodies, as well as theory of mind processing, that is, when actively thinking about what’s going on in another person’s mind.

To figure out whether interaction sensitivity in the pSTS is actually due to differences in face, body, or theory of mind processing instead, the researchers ran a second fMRI experiment. Here the videos that participants watched were very carefully controlled. These new videos did not contain sources of social information other than actions, so any pSTS sensitivity should have been from the interactive information.

Removing faces and bodies from videos about human social interactions seems almost impossible, if not inherently eerie. But the researchers designed a special set of animations that showed 2D shapes moving “purposefully” around a scene. Previous research has shown that carefully controlling how shapes like these move can create the strong impression that the shapes are “alive” and moving in an intentional way. Their movements resemble the meaningful actions that humans might perform, for example opening a door or pulling a lever.

Using these videos, the researchers looked at two aspects of how the pSTS processes visual interactive information. First, they analysed whether it could tell two interacting shapes from two non-interacting shapes, similar to the first experiment. Then they looked at whether the pSTS could tell the difference between two types of interaction: competition, where, for example, one shape tried to open a door while the other tried to close it, and cooperation, where the shapes worked together.

As predicted, the right pSTS was able to reliably discriminate between interaction and non-interaction videos, a finding that complements what was seen in the first experiment. Similarly, the pSTS could also reliably discriminate between competitive and cooperative interactions. Together, these findings demonstrate that the pSTS is a region centrally involved in processing visual social interaction information.

However, it seems unlikely that one small chunk of brain could be entirely responsible for such a complex and dynamic process. So the researchers also compared responses in a neighbouring brain region, the temporo-parietal junction, which is associated with theory of mind processing, and so may also contribute to interaction perception. They observed that, like the pSTS, the junction could reliably tell interactions apart from non-interactions, and competition apart from cooperation, although to a weaker extent.

These findings show the crucial role that the pSTS and, to some extent, the temporo-parietal junction play in perceiving visual social interactions. However, the results also open up a lot of interesting questions for future interaction research. The researchers don’t yet know which type of interactive information is most important in detecting whether two people are interacting. Nor do they know how pSTS interaction responses differ in people who tend to show atypical social visual responses, such as those with autism spectrum disorders.

This research was funded by a European Research Council “Becoming Social” grant, and the findings were published in Science Direct.